Volume 2, Year 2016 - Pages 1-13

DOI: 10.11159/ijecs.2016.001

VirtualEyez: Developing NFC Technology to Enable the Visually Impaired to Shop Independently

Mrim Alnfiai 1, 2, Srinivas Sampalli 1, Bonnie Mackay 1

1Dalhousie University, Faculty of Computer Science

6050 University Ave, Halifax, NS B3H 1W5, Canada

mrim@dal.ca; srini@cs.dal.ca; bmackay@cs.dal.ca

2Taif University, Taif, Saudi Arabia

Abstract - VirtualEyez is a low-cost mobile system that uses NFC tags (NTAG 203 tags) to help visually impaired people shop in a grocery store. While the main purpose of this system is to provide visually impaired people with greater independence when grocery shopping, anyone can use VirtualEyez to navigate a store and obtain product information. The prototype was designed to check product availability, generate optimal directions to products, and provide product information. In this paper, we describe the VirtualEyez system and discuss the results from a preliminary study where participants (visually impaired, partially impaired, and sighted) tried the prototype in a mock grocery store.

Keywords: NFC technology, visually impaired users, blind users, grocery store.

© Copyright 2016 Authors - This is an Open Access article published under the Creative Commons Attribution License terms. Unrestricted use, distribution, and reproduction in any medium are permitted, provided the original work is properly cited.

Date Received: 2016-05-26

Date Accepted: 2016-09-23

Date Published: 2016-10-27

1. Introduction

According to the World Health Organization (2012), there are currently 285 million blind and visually impaired people worldwide, 39 million of whom are considered legally blind and 246 million of whom have sufficiently low vision to be considered visually impaired [1]. Visual impairment has increased in prevalence over the past three decades, with 90% of visually impaired people living in developing countries [1]. However, visual impairment is common in developed countries, as well. The Canadian National Institute for the Blind (CNIB) has reported that, every 12 minutes, someone in Canada begins to lose his or her eyesight. According to the CNIB, the widespread occurrence of vision loss makes it "very likely that you or someone you love may face vision loss due to age-related macular degeneration, cataracts, diabetes-related eye disease, glaucoma or other eye disorders" [2].

Most visually impaired people have difficulties not only with things like reading, but also with basic daily activities such as riding the bus, identifying locations, and purchasing groceries. Not surprisingly, there is a great desire among visually impaired people to become more autonomous so that they can accomplish these tasks independently and without human assistance. Nonetheless, it is common for visually impaired people to relinquish responsibility for personal finances, shopping and the like, and they are often thought by sighted people to be unable and/or unwilling to accomplish everyday tasks on their own. In reality, most visually impaired people strive to gain or retain independence in their daily lives and to seek hobbies and interests that will allow them to lead a full life. Indeed, as one participant, who has been blind since the age of seven, has stated, "independence in one's daily activities, without requiring/requesting assistance from a sighted person, is of the highest priority" [3]. This emphasizes the need to build systems that help visually impaired and blind people become more independent.

Shopping is one activity that visually impaired people find particularly challenging, because they are often unable to find the items they want in stores. Many retailers offer online shopping, but this process may be difficult and time-consuming. For example, visually impaired people have to listen to all possible choices before they can choose the product they want. Even worse, if they miss the item they want, they have to listen to the entire list again [4]. Some organizations also offer home delivery, but even where it is available, the service sometimes requires the customer to make an appointment. These alternatives limit personal autonomy and make independent shopping difficult. As a result, blind and visually impaired people often avoid these services altogether, further degrading their quality of life.

To shop in a grocery store, blind people often have to ask a grocery employee for help or hire someone to assist them [5]. Not all grocery stores assign an employee to accompany a person with vision loss around the store, and hiring an assistant can be expensive. In short, the currently available solutions for these problems related to visual impairment are not satisfactory.

This paper describes the development of a smart technology designed to enable visually impaired individuals to shop at their own convenience using an Android phone and Near Field Communication (NFC) tags. Visually impaired users have typically made very limited use of smartphone features, because the user interfaces are accessible only through LCD screens [6]. Although they may need to memorize functions or rely on sighted assistance for some features, many visually impaired people own and use smartphones [6]. With appropriate accessibility services such as speech-access software, people with visual disabilities are better able to navigate between applications and interfaces [6].

Our system, called VirtualEyez, helps visually impaired individuals navigate a store and locate items of interest. It indicates the shortest route to the product being sought, which makes the system potentially useful not just for the visually impaired but for any shopper. Once customers have reached their desired items, the system also provides product identification and information (e.g., price).

The rest of this paper is organized as follows. We discuss the related work and then describe the system design for developing an NFC application. As well, we outline some general results from the initial evaluation of the system by real users (sighted and visually impaired). Finally, we present the conclusion and possible avenues for future work.

2. Related Work

Many different types of assistive shopping systems have been developed to help blind and visually impaired people grocery shop independently. For example, Robo-Cart uses a robotic shopping assistant [7]. It guides users in a grocery store using a Pioneer 2DX robot embedded with a laser range finder and RFID reader to navigate the visually impaired shopper to the location of the products. The robot follows line patterns on the floor with the scanner's camera to navigate within a grocery store, and the shopper uses a hand-held barcode scanner to identify a product. Installing lines on the floor of stores is a reliable and inexpensive alternative to using RFID tags [7] [8].

Ivanov [9] developed an indoor navigation system combining mobile terminals and a Java program with access to RFID tags. It offers a map of the room that includes dimensions and the relative positions of points of interest. In this application, RFID tags contain the building information. The system permits audio messages that are stored in RFID tags and recorded in the Adaptive Multi Rate (AMR) format to enable visually impaired individuals to listen to the direction commands after touching the tags. The advantages of this system include that the locations of RFID tags are easy to find because the tags are located near the door handles, with each door serving as a reference point for all points inside the room. Each tag has information about the location of all reference points inside the room. This system also allows blind users to rely on their white cane to overcome any obstacles in their way. It supports audio navigation and interacts with users' movements in a reasonable amount of time. The major disadvantages of this system are that it requires a web server and the cost of the RFID tags is too high for most visually impaired or blind people, whose incomes are generally modest.

An assistive shopping system that is relatively low-cost is BlindShopping [10] [11], a mobile system that uses an RFID reader embedded in the tip of a white cane to guide users in a supermarket. The RFID tags are attached to the supermarket floor. The system also uses embossed QR codes posted on product shelves so that users can recognize and identify products of interest with their smartphone camera. For system configuration, BlindShopping requires a web-based management component that produces barcode tags for the shelves [10] [11]. The main advantage of this system is that a supermarket does not have to go through costly and time-consuming installation and maintenance processes. As well, users of BlindShopping do not need to carry additional gadgets. However, it is difficult for blind people to use a mobile phone to read QR codes, because doing so requires a direct line of sight and precise aiming. Additionally, a blind user must send the product image to a remote database on the Web to identify it, which requires both time and Internet access; the delay can be frustrating, and Internet access can be costly for some individuals.

iCare [12] uses an RFID reader attached to a glove to help users find what they need when shopping. The system uses Wi-Fi to connect to a product database. Each product also has RFID tags so that visually impaired people can read product names simply by touching the product with the glove [12]. Similarly, Trinetra [3] is an identification system that uses a Windows-based server, a Nokia mobile phone, a Bluetooth headset, a Baracoda IDBlue Pen (for scanning RFID tags), and a Baracoda Pencil (for scanning barcodes). In handling both barcodes and RFID tags, Trinetra enables customers to use whichever identification technology is available. The overall objective of the Trinetra system is to retrieve a product's name when the user scans a barcode tag, helping the user to recognize and identify the item. The system does not support indoor navigation features, which means the user is responsible for finding the product's target location. In fact, the user does not have the ability to perform an efficient search for a target product location. For example, if a supermarket has 45,000 products, it may not be possible for the user to find a specific product in a supermarket without any navigation route or search directions.

There have been some recent examples of NFC-associated systems being designed for use in different areas. For example, Mobile Sales Assistant (MSA) [13] has been implemented in a clothing store. This system combines NFC and the Electronic Product Code (EPC) to optimize and speed up the sales process in retail stores by permitting customers to check the availability and supply of products at the point of sale. MSA may increase customer satisfaction as well as sales. Another example is a system called HearMe [14], which is used to assist visually impaired and older users to identify medication and product information (e.g., dosage) by transforming information from the package (encoded in NFC tags) into speech [14]. This app reduces the reliance on medical employees and family members to remind the patient of scheduled medication. Similarly, the French supermarket chain Casino has examined using NFC-enabled phones to help customers easily acquire product information (e.g., product name, price and ingredients) by reading the stored information on NFC tags [15]. However, because there is no indoor navigation system, customers have to touch products in the supermarket randomly until they find what they are looking for.

3. System Design

The VirtualEyez system was developed using:

- A Google Nexus 7 tablet with an Android 4.3 platform as an NFC reader

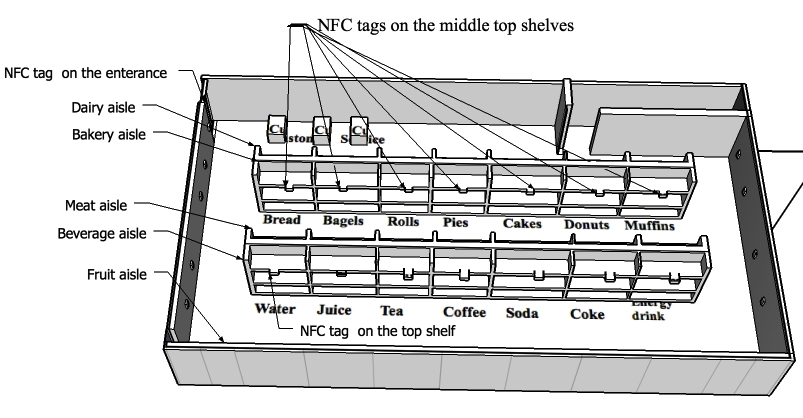

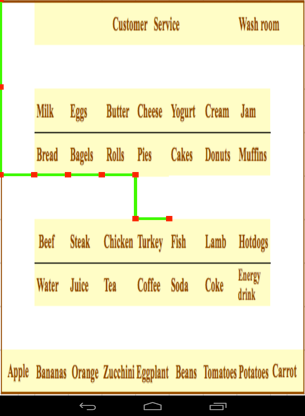

- Passive NFC tags (NTAG 203 tags) deployed on every top shelf in the grocery store, 100 cm apart from each other, and at the entrance/exit (Figure 1), and a database containing product information and product location tables.

NFC tags serve two essential functions. First, they allow for an automatic launch that eliminates the need for users to figure out where the app is on the mobile screen. Second, NFC tags allow the application to identify and keep track of the shopper's current location. To acquire this information, the mobile device will read the content of the NFC tag (Point ID) and send it to the app as a source node.
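The paper does not state how the Point ID is encoded on the NTAG 203 tags. As a minimal sketch, assuming the ID was written as a single NDEF Text record holding the decimal ID (a common choice for tag-writing apps), the function below recovers the Point ID from the record payload using the NFC Forum Text record layout: one status byte, then the language code, then the text. The function name `parse_point_id` and the decimal-text encoding are illustrative assumptions, not details from the prototype.

```python
def parse_point_id(payload: bytes) -> int:
    """Return the Point ID stored in an NDEF Text record payload.

    Assumption (hypothetical): the tag holds the ID as decimal text, e.g. "3".
    """
    if not payload:
        raise ValueError("empty NDEF Text payload")
    status = payload[0]
    lang_len = status & 0x3F          # bits 5..0: length of the language code
    body = payload[1 + lang_len:]     # skip status byte and language code (e.g. b"en")
    if status & 0x80:                 # bit 7 set: text is UTF-16
        # Use the byte-order mark if present; otherwise assume big-endian.
        has_bom = body[:2] in (b"\xfe\xff", b"\xff\xfe")
        text = body.decode("utf-16" if has_bom else "utf-16-be")
    else:                             # bit 7 clear: text is UTF-8
        text = body.decode("utf-8")
    return int(text.strip())


# Payload for the text "3" with language "en": status byte 0x02, b"en", b"3".
print(parse_point_id(b"\x02en3"))  # 3
```

On Android, the payload bytes would come from `NdefRecord.getPayload()` on the record delivered with the tag-discovered intent; the decoded ID then serves as the source node.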

- A SQLite database that stores the building's layout (the geometry of each position point on the floor of the grocery store) as well as the products available in the grocery store. The system uses this database to keep track of relational data associated with the NFC tags. For instance, the NFC tag with ID 3 is associated with the section named "Tea". The database has a point table with the grocery store map information, containing one row for each NFC tag. Each row has columns for the point ID (NFC ID), the location name, and the point's neighbors (the upper, left, right, and lower points). The NFC tag with ID 3 is also linked to three rows in the product table; in the prototype, each row in the point table is linked to three rows in the product table in this way.
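The point and product tables described above can be sketched with Python's built-in `sqlite3` module. This is a minimal sketch under stated assumptions: the column names (`up_id`, `shelf`, `price`, and so on) and the three sample tea products are hypothetical, chosen only to mirror the description of tag 3 ("Tea") being linked to three product rows.

```python
import sqlite3


def build_store_db() -> sqlite3.Connection:
    """Create an in-memory store database with hypothetical point and product tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE point (
            point_id  INTEGER PRIMARY KEY,  -- ID written on the NFC tag
            location  TEXT NOT NULL,        -- section name, e.g. 'Tea'
            up_id     INTEGER,              -- neighbouring points; NULL if none
            left_id   INTEGER,
            right_id  INTEGER,
            down_id   INTEGER
        );
        CREATE TABLE product (
            product_id INTEGER PRIMARY KEY,
            name       TEXT NOT NULL,
            point_id   INTEGER NOT NULL REFERENCES point(point_id),
            shelf      TEXT CHECK (shelf IN ('top', 'middle', 'bottom')),
            price      REAL
        );
    """)
    return conn


conn = build_store_db()
conn.execute("INSERT INTO point VALUES (3, 'Tea', NULL, 2, 4, NULL)")
# Illustrative sample rows: three products linked to point 3.
conn.executemany(
    "INSERT INTO product (name, point_id, shelf, price) VALUES (?, 3, ?, ?)",
    [("black tea", "middle", 4.99), ("green tea", "top", 5.49), ("herbal tea", "bottom", 3.99)],
)
rows = conn.execute(
    "SELECT p.location, pr.name FROM point p JOIN product pr ON pr.point_id = p.point_id "
    "WHERE p.point_id = ? ORDER BY pr.name",
    (3,),
).fetchall()
print(rows)  # [('Tea', 'black tea'), ('Tea', 'green tea'), ('Tea', 'herbal tea')]
```

Keeping the four neighbour IDs as columns of the point row means the navigation graph can be rebuilt from a single table scan.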

The VirtualEyez system's operating procedure consists of four fundamental functions: input of the item name (via voice recording or the phone's keyboard), check of its availability, navigation within the store, and product identification.

3.1. Assumptions

The VirtualEyez system was designed based on the following assumptions:

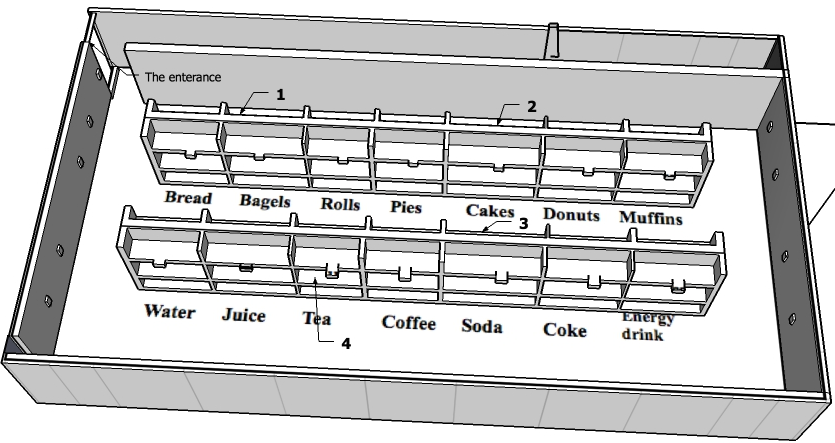

- The virtual store in the CNIB center would be built using groups of bookshelves organized as aisles (seven bookshelves per aisle).

- Visually impaired people could use a guide dog or a cane to enable them to identify any obstacles in their way.

3.2. System Functions

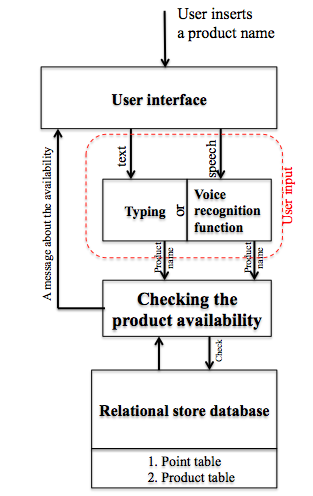

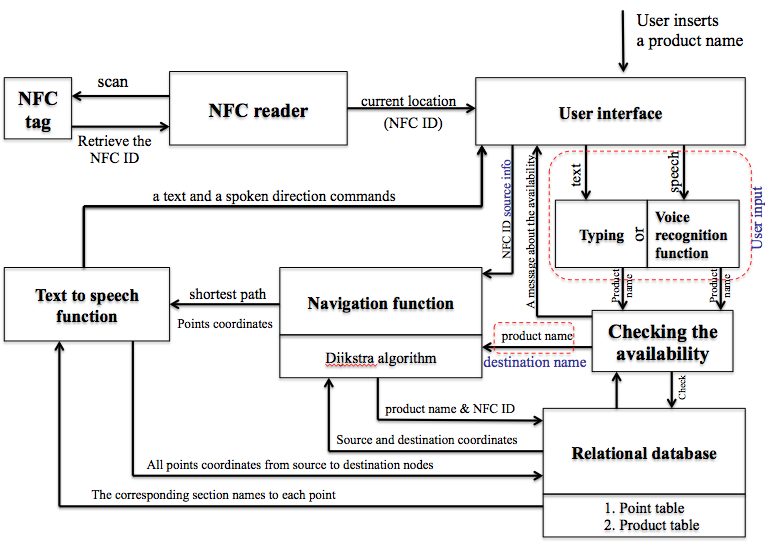

1) Inputting and checking the availability of the desired product

This task is achieved by carrying out four sequential steps (Figure 2). Each step is critical to checking availability, and the steps are interdependent. After the shopper inputs his or her desired item, the application immediately performs the remaining three steps, which are explained in detail below.

Step 1: Enable customers to input their item

This application provides two options for shoppers to enter their desired item. The first option is voice input: customers speak the name of the item to their smartphone, and the speech-to-text service converts it to text, which is recorded in the SQLite database. The second option is to type the item name using the smartphone keyboard; visually impaired shoppers who prefer this option should use the TalkBack service.

Step 2: Send the inserted product to the database

After the desired item has been entered by voice recognition or the keyboard, the application immediately sends the item name to the store database, where it is compared against the product names.

Steps 3 and 4: Check the availability of the chosen item and retrieve an alert message

After the chosen item is sent, the app queries the store database to check whether the desired item is in the grocery store. If the item is not in the database, the application delivers the message "The product is not in the store." If the item is in the database, the application delivers the alert message "The item is in the store", which confirms the item's availability. At the same time, the text-to-speech service reads the alert message aloud for the benefit of shoppers with low vision. By checking immediately whether the product is on hand, this step minimizes wasted time. The application then presents the location of the item on a map and speaks the direction commands.
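Steps 2 to 4 amount to a lookup followed by one of two alert messages. The sketch below reuses the two messages quoted above; the lower-case, whitespace-collapsing `normalize` step and the single-column `product` table are assumptions for illustration, since the paper does not say how spoken names are matched (a deployed system would likely need fuzzier matching for speech-recognition variants).

```python
import sqlite3
from typing import Tuple

# Alert messages quoted in the paper; each would also be passed to the TTS service.
FOUND_MSG = "The item is in the store"
NOT_FOUND_MSG = "The product is not in the store."


def normalize(name: str) -> str:
    """Lower-case and collapse whitespace, so 'Black  Tea' matches 'black tea'."""
    return " ".join(name.lower().split())


def check_availability(conn: sqlite3.Connection, item: str) -> Tuple[bool, str]:
    """Return (available, alert message) for an item entered by voice or keyboard."""
    row = conn.execute(
        "SELECT 1 FROM product WHERE lower(name) = ? LIMIT 1",
        (normalize(item),),
    ).fetchone()
    return (True, FOUND_MSG) if row else (False, NOT_FOUND_MSG)


# Usage with a throwaway table holding two sample product names.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (name TEXT NOT NULL)")
conn.executemany("INSERT INTO product VALUES (?)", [("plain yogurt",), ("black tea",)])
print(check_availability(conn, "Black  Tea"))  # (True, 'The item is in the store')
print(check_availability(conn, "coffee"))      # (False, 'The product is not in the store.')
```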

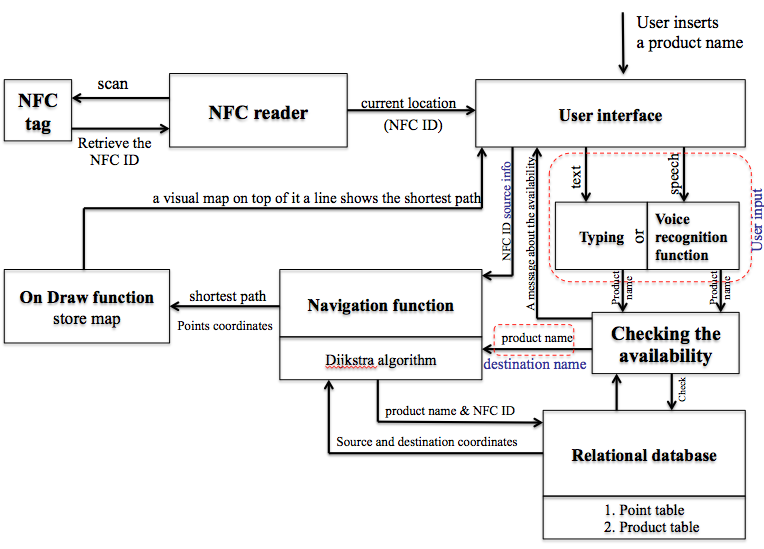

2) Indoor Navigation System Function

The VirtualEyez application also provides indoor navigation guidance using NFC tags. This function begins immediately after the application reports (through an alert message) that the product in question is available. At that point, the application gives the shopper direction commands to his or her selected item. The shopper can also update the direction commands by tapping any of the NFC tags located throughout the store, which lets the application obtain the user's location and update the route. In addition, the application tells shoppers where they are by speaking the name of the section when an NFC tag is tapped. The indoor navigation function has four steps, some of which rely on the input value (product name) provided by the availability-checking function.

Step 1: Scanning NFC tags

Each NFC tag has point position information, which is written using the Tag writer application. Any NFC-capable mobile device, in reading the contents of NFC tags, will acquire the user's location. For example, in the milk section, an NFC tag would be located on the top middle shelf. The user has to tap his/her NFC device near the tag to permit the reader to read the content of that tag.

Step 2: Retrieving the location of the chosen item

After the NFC ID (source node) and the name of the chosen item (product name) are sent to the app, the desired item's location information (node coordinates) is downloaded to the mobile phone from the grocery store database.

Step 3: Applying routing algorithm

The routing algorithm used in this application is Dijkstra's algorithm, which finds the shortest route between two locations. A route between two points is represented as an array of all the points that the route passes through, from source to destination. The application receives the input values (source node and destination node) and then runs Dijkstra's algorithm. This process is described in more detail below.
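The routing step can be sketched as Dijkstra's algorithm over the graph implied by the point table's up/left/right/down columns. In this minimal sketch, assuming every edge costs one tag spacing (tags are about 100 cm apart in the prototype), the helper `neighbours_from_rows` and the six-node layout are hypothetical; the result is the ordered list of node IDs the shopper must pass.

```python
import heapq
from typing import Dict, Iterable, List, Optional, Tuple

Row = Tuple[int, Optional[int], Optional[int], Optional[int], Optional[int]]


def neighbours_from_rows(rows: Iterable[Row]) -> Dict[int, List[int]]:
    """Build an adjacency list from (point_id, up, left, right, down) rows; None = no neighbour."""
    return {pid: [n for n in (up, left, right, down) if n is not None]
            for pid, up, left, right, down in rows}


def dijkstra(graph: Dict[int, List[int]], source: int, target: int) -> List[int]:
    """Return the node IDs on a shortest path from source to target ([] if unreachable).

    Every edge is given weight 1 (one tag spacing, about 100 cm in the prototype).
    """
    dist = {source: 0}
    prev: Dict[int, int] = {}
    heap = [(0, source)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        if node == target:
            break
        for nxt in graph.get(node, []):
            nd = d + 1
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if target not in dist:
        return []
    path = [target]
    while path[-1] != source:  # walk predecessors back to the source
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical layout: a corridor 1-2-3-4 with a side branch 2-5-6.
rows = [(1, None, None, 2, None), (2, None, 1, 3, 5), (3, None, 2, 4, None),
        (4, None, 3, None, None), (5, 2, None, None, 6), (6, 5, None, None, None)]
graph = neighbours_from_rows(rows)
print(dijkstra(graph, 1, 6))  # [1, 2, 5, 6]
```

With uniform weights a breadth-first search would return an equally short path; Dijkstra's algorithm becomes necessary only if edge weights are set to real distances between unevenly spaced tags.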

Step 4: Representing a visual or audible map

After determining the best route, the system returns an array list with the nodes that should be passed through in order to reach the destination. VirtualEyez provides navigation guidance by combining visual and audio directions during navigation inside a grocery store. While low-vision and sighted people can follow both visual and audio directions, blind shoppers are entirely dependent on audio direction.

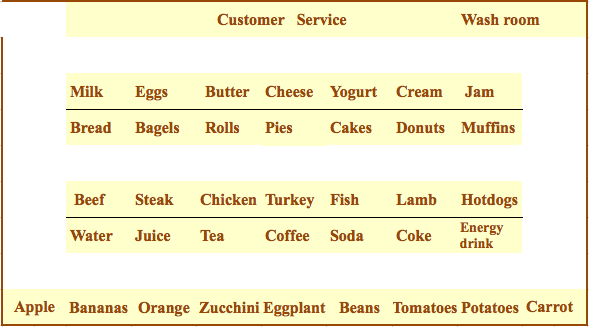

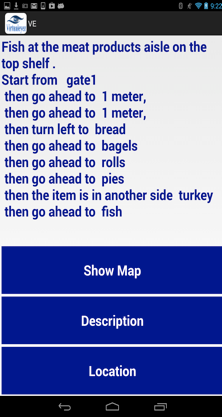

A visual map is the most important element in a navigation system, as it allows the user to easily see his or her current location and the path to the destination. We used a two-dimensional map in which the grocery store is represented as an image with nodes and edges. Each location in the store is represented by a node carrying positional information, and each line between two adjacent nodes represents a segment along which the shopper can move from the current location toward the destination. As shown in Figure 3, the grocery store map has six aisles with seven sections in each aisle, so there are seven nodes per aisle.

The user interface of the indoor map system supports high usability of the indoor navigation system by displaying the store map and the optimal route. The map is drawn simply so that shoppers can see the whole store, and the route between the current location and the target item is shown as a bold green line so that people with low vision can see the path clearly. As Figure 4 shows, after Dijkstra's algorithm is applied, the On Draw function receives the coordinates of all of the points that the user has to pass through from his or her current location to the selected item. The On Draw function uses the two-dimensional grocery store map image as a background and then draws a line on top of the image through the point coordinates from the source node to the destination.
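Before drawing, each node ID on the route has to be mapped to a pixel position on the map image. The sketch below assumes, hypothetically, that nodes are numbered aisle by aisle on a regular grid (six aisles of seven sections, as in Figure 3) with a fixed pixel spacing; `grid_coords`, `route_segments`, the spacing and the origin are illustrative, not taken from the prototype.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]


def grid_coords(aisles: int, sections: int, spacing: int, origin: Pixel) -> Dict[int, Pixel]:
    """Assign each node a pixel position on the map image.

    Hypothetical numbering: node IDs run 1, 2, ... down aisle 0, then aisle 1, etc.;
    aisles are columns (x) and sections are rows (y, growing downwards).
    """
    ox, oy = origin
    return {a * sections + s + 1: (ox + a * spacing, oy + s * spacing)
            for a in range(aisles) for s in range(sections)}


def route_segments(path: List[int], coords: Dict[int, Pixel]) -> List[Tuple[Pixel, Pixel]]:
    """Turn a route (node IDs from the shortest-path search) into line segments to draw."""
    pts = [coords[n] for n in path]
    return list(zip(pts, pts[1:]))


coords = grid_coords(aisles=6, sections=7, spacing=100, origin=(50, 50))  # 42 nodes
print(route_segments([1, 2, 9], coords))
# [((50, 50), (50, 150)), ((50, 150), (150, 150))]
```

On Android, each segment could then be passed to `Canvas.drawLine` inside `onDraw`, using a `Paint` configured with a green colour and a wide stroke width to produce the bold route line.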

The application provides the direction commands as a two-part text message. The first part contains the name of the selected product, as well as the name of the aisle and the position of the shelf (bottom, top, or middle) containing the product. The second part contains the direction commands (go ahead, turn left, and turn right) from the current location to the selected item. VirtualEyez also provides an audio map to assist people with no vision: the text-to-speech (TTS) service reads the text message aloud.
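One way to derive the "go ahead / turn left / turn right" commands from the route is to compare successive movement directions with a 2-D cross product. This is a sketch under assumptions: map coordinates have y growing downwards (as in image space), the shopper is assumed to face along the first leg, and the section names and coordinates are hypothetical. The paper does not describe how VirtualEyez itself computes turns.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]


def turn(prev: Pixel, cur: Pixel, nxt: Pixel) -> str:
    """Classify the turn at `cur` using the 2-D cross product.

    Map-image coordinates are assumed, with y growing downwards, so a positive
    cross product means a clockwise (right) turn.
    """
    dx1, dy1 = cur[0] - prev[0], cur[1] - prev[1]
    dx2, dy2 = nxt[0] - cur[0], nxt[1] - cur[1]
    cross = dx1 * dy2 - dy1 * dx2
    if cross > 0:
        return "turn right"
    if cross < 0:
        return "turn left"
    return "go ahead"  # collinear; on a grid map a shortest path never doubles back


def direction_commands(path: List[int], coords: Dict[int, Pixel], names: Dict[int, str]) -> List[str]:
    """One spoken command per leg of the route, naming the section reached."""
    commands = []
    for i in range(len(path) - 1):
        # The shopper's initial facing is unknown; assume they face the first leg.
        if i == 0:
            action = "go ahead"
        else:
            action = turn(coords[path[i - 1]], coords[path[i]], coords[path[i + 1]])
        commands.append(f"{action} to {names[path[i + 1]]}")
    return commands


coords = {1: (0, 0), 2: (100, 0), 5: (100, 100), 6: (200, 100)}
names = {1: "Entrance", 2: "Bread", 5: "Dairy", 6: "Eggs"}
print(direction_commands([1, 2, 5, 6], coords, names))
# ['go ahead to Bread', 'turn right to Dairy', 'turn left to Eggs']
```

The resulting strings would form the second part of the message and be handed, together with the product/aisle/shelf part, to the TTS service.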

Figure 5 presents the result of applying Dijkstra's algorithm to find the shortest path from the source point to the destination point: an array containing the coordinates of all points that the user has to pass through to move from his or her current location to the selected item. This array is the input to the text-to-speech function, which fetches the section name corresponding to each point coordinate. The direction commands are then sent to the user interface as both text and voice.

The audio map can also tell shoppers their exact location at any point, which is of great value given how little location information blind shoppers can obtain from landmarks. To find his or her exact location, a shopper simply taps the nearest NFC tag; the app immediately reads the tag's unique ID and sends it as a query to the store database, which returns the location name matching that ID to the customer.
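The "where am I" lookup is a single keyed query on the point table. In this minimal sketch the table layout and the spoken sentences are hypothetical; only the flow (tag ID in, location name out) follows the description above.

```python
import sqlite3
from typing import Optional


def location_for_tag(conn: sqlite3.Connection, nfc_id: int) -> Optional[str]:
    """Look up the section name for a tapped tag's ID, or None if the ID is unknown."""
    row = conn.execute("SELECT location FROM point WHERE point_id = ?", (nfc_id,)).fetchone()
    return row[0] if row else None


def where_am_i(conn: sqlite3.Connection, nfc_id: int) -> str:
    """Build the sentence handed to text-to-speech (wording is hypothetical)."""
    name = location_for_tag(conn, nfc_id)
    if name is None:
        return "Unknown tag. Please tap another tag."
    return f"You are in the {name} section."


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE point (point_id INTEGER PRIMARY KEY, location TEXT NOT NULL)")
conn.executemany("INSERT INTO point VALUES (?, ?)", [(3, "Tea"), (4, "Coffee")])
print(where_am_i(conn, 3))   # You are in the Tea section.
print(where_am_i(conn, 99))  # Unknown tag. Please tap another tag.
```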

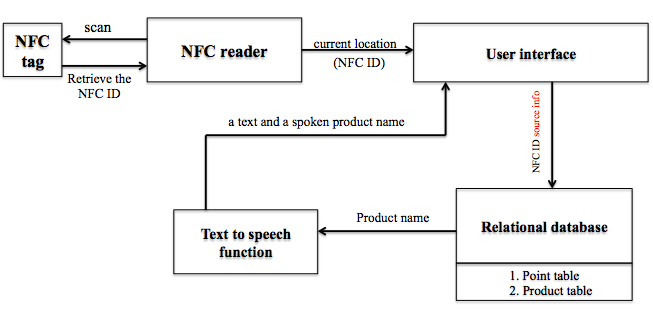

3) Product identification

Product identification is a vital step in helping people with visual disabilities to identify their selected items. The aim of this function is to confirm to shoppers that they have reached the correct section and, more importantly, that they have selected the correct item.

As Figure 6 shows, when the user taps the NFC tag, the application immediately reads the product name aloud and gives access to relevant information about the product. When shoppers record or type the name of a desired product, the application fetches the general information for that product from the store database. Each product in the grocery store database has general information, such as price, expiry date, ingredients and nutrition facts. The application presents item information as an audio message so that shoppers with low vision can hear it, and they can press the "description" button repeatedly to hear the product information again.
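Product identification can be sketched as two queries: one listing the products linked to the tapped tag, and one building the text replayed by the "description" button. The column names, the sentence formats and the sample product row below are all hypothetical; the paper lists price, expiry date, ingredients and nutrition facts as the stored information but does not give a schema.

```python
import sqlite3
from typing import List, Optional


def products_at_tag(conn: sqlite3.Connection, nfc_id: int) -> List[str]:
    """Phrases to read aloud when a shelf tag is tapped: each product and its shelf."""
    rows = conn.execute(
        "SELECT name, shelf FROM product WHERE point_id = ? ORDER BY name", (nfc_id,)
    ).fetchall()
    return [f"{name}, {shelf} shelf" for name, shelf in rows]


def description(conn: sqlite3.Connection, name: str) -> Optional[str]:
    """Text replayed each time the "description" button is pressed (format is hypothetical)."""
    row = conn.execute(
        "SELECT price, expiry, ingredients FROM product WHERE name = ?", (name,)
    ).fetchone()
    if row is None:
        return None
    price, expiry, ingredients = row
    return f"{name}: price {price:.2f} dollars, best before {expiry}, ingredients: {ingredients}."


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (name TEXT, point_id INTEGER, shelf TEXT, "
             "price REAL, expiry TEXT, ingredients TEXT)")
conn.execute("INSERT INTO product VALUES "
             "('black tea', 3, 'middle', 4.5, '2017-06-30', 'black tea leaves')")
print(products_at_tag(conn, 3))  # ['black tea, middle shelf']
print(description(conn, "black tea"))
# black tea: price 4.50 dollars, best before 2017-06-30, ingredients: black tea leaves.
```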

4. VirtualEyez application interface

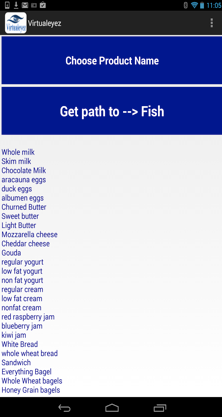

The application, which is installed on the shopper's Android device, has three sequential views (as shown in Figure 7), each with a particular function.

Figure 7. VirtualEyez interfaces.

The first interface shows two large buttons labelled "Choose product name" and "Get path". Clicking the "Choose product" button in the VirtualEyez application interface (see a) brings the user to the recording screen, which runs Google's speech recognition service so that customers can record the name of a desired item. In response, the application displays an alert message informing the customer of the selected item's availability. Clicking the "Get path to selected item" button (see a) takes the user to the path screen. When a shopper scans a passive NFC tag, its unique ID acts as an input to the shortest-route algorithm. The application then fetches the source (the shopper's current location) and the target item ID from the database table and executes Dijkstra's algorithm to find the shortest path. The shortest path is returned as an array of the node IDs to be traversed to reach the desired item. Once the shortest path is determined, it is shown as a text message on the screen (see b) or sent to the On Draw function, which overlays it on the map image (see c). When customers reach their selected items, they touch the NFC tag on the top shelf to confirm that they have reached the correct location.

5. Experiment design

To examine the usability of the VirtualEyez system, we ran an initial study to obtain user feedback. We were interested in learning how useful and usable the system was for grocery shopping, and we were also looking for suggestions to improve the system. We set up a mock grocery store in the Canadian National Institute for the Blind (CNIB) office. This mock grocery store had four aisles with several products on the shelves to make the shopping experience realistic (Figure 8). The participants were asked to buy four products, which were located on different shelves and locations within the shelves (e.g., on the top, on the bottom, and in the middle).

5.1. Recruitment of participants

The participants were recruited in Halifax, NS, with the assistance of the local CNIB centre. We sent out a recruitment email to CNIB clients and volunteers to inform them about the goal of the study.

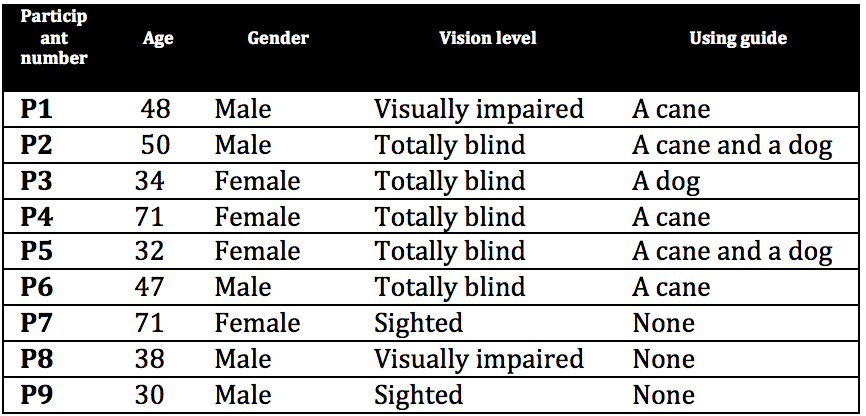

Participants were selected based on age (over 18 years old), visual impairment level and smartphone experience. Participants included not just the visually impaired but also individuals with normal sight to collect a variety of opinions regarding the system. We conducted our study with 9 participants with different levels of vision: two partially sighted participants (2 males aged 38-48), five blind participants (2 males and 3 females aged 32-71) and two sighted participants (1 male and 1 female aged 29-71) (as shown in Table 2).

5.2. Tasks

We began with an information session in which we explained the main concept of the VirtualEyez system, the study and its objectives, and the study steps. The study itself took about 45 minutes per participant. During the experiment, participants performed the following tasks:

- The participants used a mobile device to scan the NFC Tag, which was placed at the entrance of the store.

- Each participant recorded his or her shopping list in the smartphone app using voice recognition or by typing.

- The participants followed the path on the map to reach their items. The map is supported by voice commands to help people with visual impairments navigate through the store.

- If participants got lost, they could touch any of the NFC tags, which were prominently displayed in the grocery store, to update their path. Blind participants could touch the walls, aisles or shelves near them to find the prominent NFC tags and then scan them with their device.

- Participants could obtain the exact locations of the selected items by listening to the audio message (e.g. the item is on the top shelf).

- The participants were also able to listen to or read general information about their items (e.g., color, size, and ingredients), and find nutrition facts information. This is because each shelf has an NFC tag and the application can read it when the customer scans the tag.

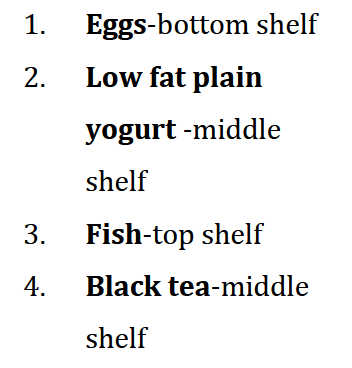

5.3. Study Description

Each participant was directed to the front of the grocery store (near the entrance), given a plastic bag to carry, and asked to buy four items from different locations within the store: eggs were located on the bottom shelf, plain yogurt on the middle shelf, fish on the top shelf, and black tea on the middle shelf (see Figure 9). We asked participants to buy eggs, plain yogurt, fish and black tea, in that order. All participants shopped for the same products in the same order; we did not counterbalance task order. Blind participants were allowed to use an aid (e.g., a guide dog or white cane) after receiving directions from their smartphones. After buying the four items in the mock grocery store, each participant answered interview questions about their opinion of the application.

During the experiment, once a participant had picked up all four items, the researcher checked that he or she had selected the correct four items. The researcher also took notes on where participants had difficulty completing the tasks.

5.4. System Evaluation

In order to evaluate the usability of the VirtualEyez system, we collected qualitative data using three main data collection methods:

- The pre-study questionnaire had 3 main sections. The first section contained demographic questions, such as age, gender and vision level. The second section focused on the difficulties that the participants face while buying their groceries, and the third section pertained to the solutions that they have used to overcome these difficulties.

- Observational notes were taken while participants bought the four items; no video was recorded. The notes helped us identify any difficulties that participants had when collecting an item from the top, middle and/or bottom shelf, as well as any requests for assistance during the experiment.

- The post-study interviews were audio taped and used to obtain user feedback about the application and the users' level of satisfaction. In particular, the interviews sought specific participant opinions on the VirtualEyez system as well as suggestions for improving the application.

Overall, the interview and notes indicated the level of satisfaction with the application among sighted, visually impaired, and blind individuals, and provided critical indications about how the application might be improved in order to make it most effective.

6. Results

We used qualitative methods to assess the application. Several factors were considered with regard to their possible effect on the usability of the VirtualEyez system, including age, gender, vision level, and navigation assistance (a cane, a dog, both or none). Consideration was also given to participants' usual difficulties while buying groceries and their current use of supportive systems and accessibility services. In addition to the data generated by the questionnaires and interviews, we also noted whether or not participants asked for assistance during the experiment.

6.1. Pre-study questionnaire

The pre-study questionnaire asked about participants' demographic data, including age, gender, vision level and whether they use a guide when navigating indoors. It also asked about the main challenges participants face while grocery shopping and how they overcome these challenges. The last part of the questionnaire asked how participants interact with their mobile phones and which accessibility services they use.

Only one visually impaired participant used a cane to get around and to help him avoid obstacles. The other visually impaired participant does not use a cane because he has enough vision to see the obstacles in his way. Two of the blind participants can use either a cane or a guide dog to navigate. During the study two blind participants used a dog to navigate through the store to help them avoid obstacles. The remaining totally blind participant used a cane.

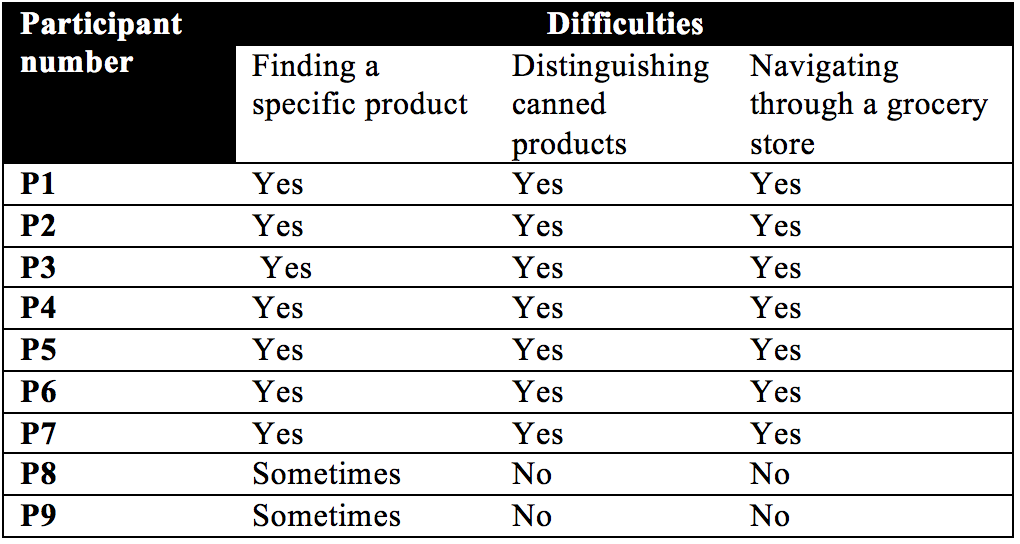

The questionnaire revealed a number of trends regarding grocery-shopping difficulties (see Table 3):

- The visually impaired and blind participants had difficulty finding specific items quickly in a grocery store. Sometimes the sighted participants faced this type of challenge as well.

- Both the visually impaired and blind participants found it difficult to recognize and distinguish different canned products.

- One visually impaired participant and all blind participants had difficulty navigating through grocery stores. The second visually impaired participant stated that he has enough vision to see which aisle he is in and any obstacles in his way.

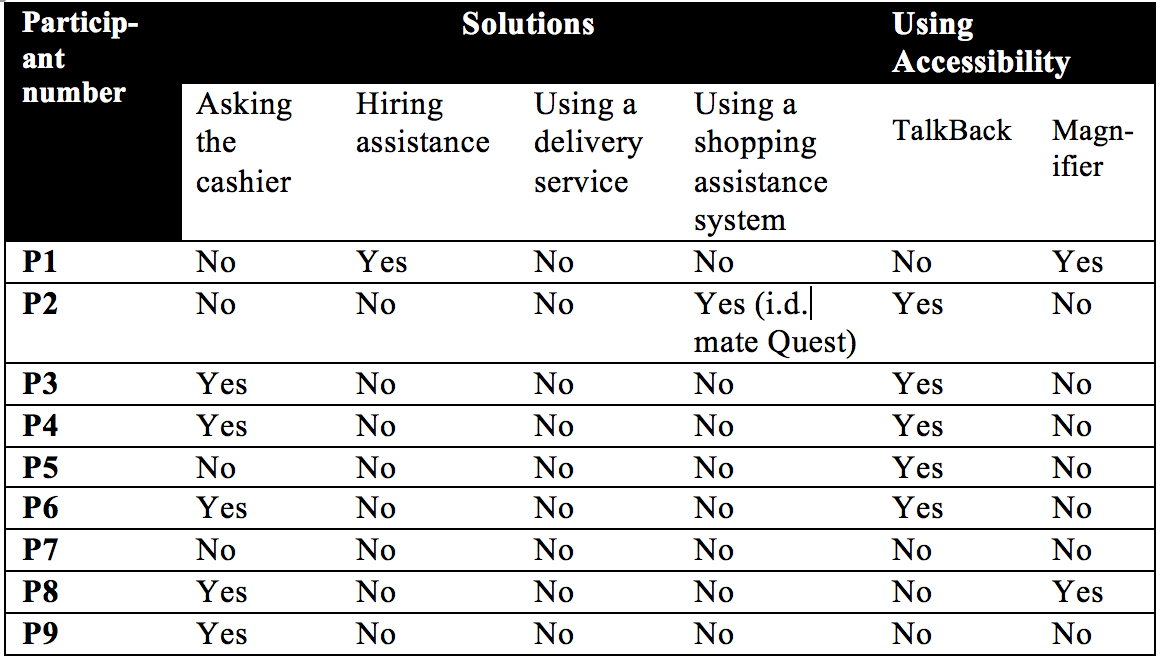

The questionnaire revealed a number of trends regarding how participants address these challenges:

- One visually impaired participant prefers not to ask customer service for help finding his items because of the long wait that can be involved. He stated that because he appears to have normal vision, when he asks for help finding a product, the clerk simply tells him which aisle the item is in, so he must explain that he is visually impaired and needs someone to show him the exact location. In contrast, the second visually impaired participant usually asks the clerk for help, especially if the product is new, and he said that he too has to tell the clerk that he is visually impaired because he appears to have normal vision. Three of the blind participants regularly ask for such assistance, while the other two do not.

- Surprisingly, all participants reported that hiring assistants and using delivery services are not good solutions for doing grocery shopping, primarily for financial reasons.

- Only one totally blind participant had used a supportive system, and only at home; he had never used it in grocery stores. This system, the i.d. mate Quest, is a barcode scanner that helps visually impaired people identify items by scanning their barcodes. It uses both text-to-speech and voice recording technologies (En-Vision America, 2014).

- All visually impaired participants used a magnifier service to enlarge on-screen text so they could more easily read messages and button labels, and all totally blind participants used the TalkBack service to interact with their devices.

- Not surprisingly, most blind participants used voice recognition, sometimes in combination with typing, to enter product information, while the others relied primarily on typing.

6.2. Observational notes

The notes show that no participants had difficulty finding the exact location of a product, whether on the top, middle, or bottom shelf. One participant said that the app addressed the problem of finding items on low and high shelves.

6.3. Interviews

Interview responses revealed overall satisfaction with the application and its effectiveness as a shopping aid.

1. Buttons. The visually impaired participants found the buttons well organized and easy to find. Participant 1 stated, "there were not many buttons. It is a simple interface. The button size was great. The color was good. Good contrast," and participant 8 said, "the buttons were large and I could actually see them clearly." Participant 8 also suggested placing only one button on each page so that it could be made large enough for visually impaired users to see. These participants also found the function of each button easy to understand. The totally blind participants likewise found the buttons easy to find with the support of the TalkBack service, and recommended placing the buttons in two rows at the bottom of the screen. Participant 2, who had no vision, suggested eliminating the location button and having the app speak the direction commands automatically when the shopper touches an NFC tag. Participant 6 stated, "the function of each button was easy to understand. The app spoke the name of the button and then basically the name explains what it did." Sighted participants also found the buttons well organized. One sighted participant liked the fact that the app provided two ways (visual and auditory) to find the buttons.

2. Visual Map. The sighted participants preferred to use the visual map and found it easy to follow. They also found the text sizes of the button labels, product information, and alert messages on the mobile screen clear, as were the colors.

3. Sound. The visually impaired participants found the audio output of the VirtualEyez system clear and easy to follow, but too slow. Four of the blind participants also found the audio clear, but wanted to be able to adjust its speed. The fifth blind participant (participant 4) found the audio unclear because of a hearing problem: he could hear the app when it read a single word, such as the name of a section, but not when it read the direction commands.

4. NFC tag locations. Following the direction commands and locating the NFC tags was easy for all participants, especially because the tags are placed at eye level. Participant 1, who is visually impaired, found it quite easy to locate the NFC tags on the top shelves because the shelves were black and the tags were white, and this contrast allowed him to spot the tags visually.

The two visually impaired participants felt that they bought their desired items faster than usual. Participant 1 said, "If it is something new I have never purchased, I can spend an hour looking." Participant 9, a sighted participant, reported, "I cannot say it is faster or not because the store here is small."

6.4. The advantages and disadvantages of the VirtualEyez system based on participants' feedback

All participants reported that the VirtualEyez system enabled them to collect the four chosen items independently. Participant 5 said, "The app gave me very specific directions where the items are located, so including what items I would pass to get to it. If I use it in the grocery store, I would not have to ask anyone for help." Participant 8 stated, "I find it really challenging [to] see prices and labels. By using this app I can do it faster. Usually I only get things I know how much they are." Participant 7, a sighted participant, reported that she sometimes has trouble finding an item after its location has changed. With this system in place, the store would have to keep each item in the same place, which would address this difficulty.

All participants found the user interface easy to use. Participant 1, who is visually impaired, found the TalkBack service helpful and reported that using it several times would help him memorize the locations of the buttons. Participant 8 preferred to use the magnifier (Zoom) to enlarge the text on the screen. Participant 2, who is blind, stated, "It was not easy at first, the dots helped me." He suggested placing the buttons closer together so that users do not have to jump from the top of the screen to the bottom.

When asked about the benefits of the system, all participants felt that it would aid shoppers in two ways, the first being increased independence. The visually impaired and totally blind participants felt that the app has the potential to help people with low vision decide what to buy, because the description button tells them the price and nutrition facts of a product. They also found the location button helpful because it tells them which products they are passing, potentially reminding them of items they have forgotten. Participant 4 reported, "I never go shopping by myself. This app is helpful," and participant 5 stated, "I think the biggest benefits, it would allow me to shop independently, without searching for the barcode, without requiring assistance from the store staff, which sometimes take a long time. Once I use it in the store it will help me a lot because I'll get used to it and the layout. Then it will be so easy for me to navigate and find the product." Participant 2, who is totally blind, liked that the app gives such explicit location directions, including the shelf level. Participant 7, a sighted participant, liked the app because it told her what is in the store, so she did not have to spend time looking for items that might be out of stock.

The two main concerns of the visually impaired participants were knowing the price of items and saving time. After using the app, all visually impaired participants reported that the VirtualEyez system addresses these concerns, since scanning barcodes does not tell them the price or other information. One visually impaired participant (participant 8) stated that the app would be more useful in grocery stores than in other stores, because grocery stores carry so many products that shopping there is difficult.

The system also addressed the totally blind participants' concerns about grocery shopping. Participant 5 reported, "It addresses my concerns about the price and nutrition facts, which I was not really expecting. It tells me what is in the aisle, so if you want to browse the aisle you can touch the tag to know exactly what type of items are in the aisle."

The experiment was designed to identify the strengths and weaknesses of the VirtualEyez system, and it succeeded in highlighting several issues participants faced while using it. The most significant issue, raised by three blind participants during the interviews, was that the system offers no way to help them avoid obstacles. In a real store, employees often place sale boxes and new stock in the aisles; participants noted that a successful system must be able to handle such obstacles. Another issue is that blind participants have to carry a mobile phone in addition to a shopping basket, which could be difficult in combination with a cane or a guide dog. Thus, it may be necessary to reduce the physical burden the system places on users of such mobility aids. One visually impaired participant (participant 8) had difficulty understanding the voice, mainly because he usually uses a magnifier to enlarge text and never uses the TalkBack service. Participant 3, who is totally blind, found that the speech recognition service did not work perfectly: she had to repeat the product name until the system successfully registered it.

6.5. Discussion

VirtualEyez is a proof-of-concept prototype designed to be deployed in grocery stores for the benefit of shoppers, especially those with visual impairments. The purpose of this study was to test the VirtualEyez system in a grocery shopping environment in order to determine whether it effectively helps visually impaired shoppers find items, and to identify the system's strengths and weaknesses. The participants' overall impression of the VirtualEyez system after the experiment was positive: shoppers indicated that it was easy to use and helped them with their grocery shopping.

The overall results indicate a serious need to make the app's user interface more straightforward so that it can easily serve blind and visually impaired people. The results of the pre-study questionnaire indicate a need for an assistive shopping system that helps visually impaired and totally blind shoppers overcome the challenges they usually encounter when buying items; as participants stated, most of the solutions they currently use do not meet their needs. Participants also said that time and cost are the most important factors when considering delivery services, hired assistants, and assistive shopping systems, and that these factors lead them to avoid such solutions. We should therefore consider these two factors carefully when improving the system.

The observational notes show that eight participants bought the four chosen items without any difficulty and without asking for help. The one participant with both a visual and a hearing impairment (participant 4) did ask for assistance, which points to a direction for future work: enhancing the system for people with multiple disabilities, for example by using vibration. In addition, the observational notes indicate that the VirtualEyez app successfully addresses the issue of locating products on different shelves (top, middle, or bottom).

The VirtualEyez system has features that existing shopping assistance systems lack, such as running on users' own familiar smartphones, requiring users to carry less technology, scanning NFC tags, delivering immediately updated direction commands, and combining identification and navigation in one system to overcome the limitations of other systems. For example, the Trinetra system [3] required blind users to locate the barcode on a grocery product, a task that is very challenging because the barcode cannot be found by touch. In addition, systems that use barcode readers do not provide the price of products. Indeed, one of the best aspects of this system is that it requires no extra equipment (e.g., an RFID or barcode reader). With many existing systems, the user must wait for the product's identity to be sent to a remote server; VirtualEyez avoids this potential delay by responding immediately when an NFC tag is scanned. The system also costs less than other systems because it uses inexpensive passive NFC tags. Lastly, VirtualEyez may assist various groups of people: not only blind and visually impaired individuals, but also sighted ones.

6.5.1. Limitations

The ability to draw firm, broad conclusions from this study is constrained by several limitations. For example, as one sighted participant pointed out, the mock grocery store was small, with only four aisles. Additionally, finding four items is an unrealistically small task compared with the number of items bought on a typical grocery trip. Testing the application in a real store would likely reveal other issues and challenges, possibly related to features not reflected in the mock store (e.g., refrigerated sections with doors rather than open shelves). Perhaps the most notable limitation is the small sample size: with only nine participants across three groups, it is virtually impossible to draw generalizable conclusions or obtain an exhaustive picture of what works well and what works poorly.

While the VirtualEyez system may benefit a number of populations (not only visually impaired and blind people, but also sighted individuals), it may not be useful to individuals who have other impairments in addition to vision problems. For example, one participant in this study, who had both a visual and a hearing impairment, was unable to take full advantage of the system and resorted to searching for the products at random. Thus, VirtualEyez may not help individuals whose other impairments interfere with their ability to use the system's functionality.

As stated above, the small number of participants in each group prevents generalizing these findings to all shoppers with low or no vision. Nevertheless, we have demonstrated that VirtualEyez is a usable system that aids shoppers with vision problems. All participants successfully found the four chosen items in the experiment session, although completion times varied and correlated somewhat with participants' degree of visual impairment and their age. Evidence from this study also suggests that use of the app will improve with practice, so its benefits to shoppers may be greater than what was observed here.

6.5.2. Design Recommendations

Designing an app for blind users:

- Make it possible for the user to adjust the reading speed. The speech rate should not be fixed but should be adjustable to suit each user.

- Use the TalkBack and text-to-speech services to enable blind users to access mobile screen content.

- Use periods, semicolons, and commas in on-screen content so that the reading voice pauses between different pieces of information, making the content easier to understand.

- Add a voice recognition service to make entering text easier and faster for people with no vision.

- Increase object size (large buttons) to aid people with poor or no vision since they may be less precise in pointing to specific parts of the mobile screen.
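The first and third recommendations can be made concrete with a small sketch. The Java class below is hypothetical: the names `SpokenContent`, `clampRate`, and `toSpeakableText`, and the 0.5 to 2.0 rate range, are our assumptions for illustration and are not taken from VirtualEyez. It clamps a user-chosen speech-rate multiplier rather than hard-coding one, and joins separate pieces of product information with periods so that a text-to-speech engine pauses between them. On Android, such a rate could be passed to `TextToSpeech.setSpeechRate`, where 1.0 is the normal rate; that call is omitted here so the sketch runs on a plain JVM.

```java
import java.util.List;
import java.util.StringJoiner;

/**
 * Hypothetical helper (not part of VirtualEyez) illustrating two design
 * recommendations: a user-adjustable speech rate and punctuation-separated
 * spoken content.
 */
public final class SpokenContent {

    // Assumed bounds for the rate multiplier; 1.0 means normal speed.
    public static final float MIN_RATE = 0.5f;
    public static final float MAX_RATE = 2.0f;

    private SpokenContent() {}

    /** Keeps a user-chosen rate within the supported range instead of using a fixed value. */
    public static float clampRate(float requested) {
        return Math.max(MIN_RATE, Math.min(MAX_RATE, requested));
    }

    /**
     * Joins separate pieces of information (e.g. section, product, shelf, price)
     * with ". " and ends with a period, so a TTS engine pauses between them.
     */
    public static String toSpeakableText(List<String> parts) {
        StringJoiner joiner = new StringJoiner(". ", "", ".");
        joiner.setEmptyValue(""); // nothing to say yields an empty string, not "."
        for (String part : parts) {
            // Strip trailing punctuation so we never produce ".." or ",."
            String cleaned = part.trim().replaceAll("[.;,]+$", "");
            if (!cleaned.isEmpty()) {
                joiner.add(cleaned);
            }
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        System.out.println(toSpeakableText(
                List.of("Aisle 2", "Canned soup", "Middle shelf", "Price 2 dollars")));
        System.out.println(clampRate(3.0f));
    }
}
```

Running `main` prints `Aisle 2. Canned soup. Middle shelf. Price 2 dollars.` followed by `2.0`, since a requested rate of 3.0 is clamped to the assumed maximum. Clamping rather than rejecting out-of-range values keeps the speech usable even if a settings control sends an extreme value.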

7. Conclusion and future work

Visual impairment, on the rise throughout the world, impacts all aspects of people's lives, including such routine tasks as grocery shopping. Thus, there is a growing need for assisted shopping solutions with the potential to improve the independence - and, therefore, quality of life - of visually impaired individuals. This paper proposed a novel system, VirtualEyez, to help people with vision disabilities navigate through a supermarket and locate their products.

One of the most novel aspects of this system, and of this study, is the use of NFC technology, which provides a reliable, low-cost indoor navigation system as well as an identification system.

The VirtualEyez system was evaluated in a mock grocery store set up within the CNIB centre. The evaluation helped us identify the strengths and weaknesses of the VirtualEyez prototype's features and provided feedback for the developers. The study also found that the audio and visual information received from scanning NFC tags may assist users while shopping.

The main contribution of our research is the use of existing technologies (e.g., NFC tags and mobile devices) to create a low-cost proof-of-concept mobile application that helps blind and visually impaired people overcome some of the barriers they face while grocery shopping. The initial evaluation received positive feedback and suggested several improvements to the system. Based on this evaluation, we have proposed five design recommendations for creating apps for blind and visually impaired users.

There are various ways in which VirtualEyez could be expanded to increase its usefulness. The system currently works only for a single-door layout, but it could be extended to buildings with multiple doors, so that navigation would be possible through entire buildings, with elevators and emergency exits taken into account. We also plan to extend VirtualEyez to support buildings with multiple floors.

There is a need for future work to evaluate VirtualEyez more thoroughly, using a larger and more representative sample of sighted, visually impaired, and blind individuals. There is also a need to add obstacle avoidance to the VirtualEyez system in order to make it more effective. We would also like to extend the system to support different languages. For example, a user visiting an Arabic grocery store may want to find items without needing to understand the product labels. In general, we hope to improve the application in direct response to the feedback we received in this study.

Acknowledgment

We thank the Canadian National Institute for the Blind, and especially the study volunteers. We also gratefully acknowledge support from Taif University and the Saudi Bureau in Canada.

References

[1] WHO, "Visual impairment and blindness," August 2014. [Online]. Available: http://www.who.int/mediacentre/. [Accessed 12 October 2014].

[2] Lighthouse International, "Causes of Vision Impairment," 2014. [Online]. Available: http://www.lighthouse.org/. [Accessed 13 October 2014].

[3] P. E. Lanigan, A. M. Paulos, A. W. Williams and P. Narasimhan, "Trinetra: Assistive Technologies for the Blind," in International IEEE-BAIS Symposium on Research on Assistive Technologies (RAT), Dayton, OH, 2006.

[4] A. King, "Blind people and the world wide web," June 2008. [Online]. Available: http://www.webbie.org.uk/. [Accessed 20 August 2014].

[5] National Federation of the Blind (NFB), "Frequently asked questions," 2014. [Online]. Available: https://nfb.org/Frequently-asked-questions. [Accessed 13 October 2014].

[6] The International Braille and Technology Center Staff, "Accessible Cell Phone Technology," 2006. [Online]. Available: https://nfb.org/images/nfb/publications/bm/bm06/bm0606/bm060608.htm. [Accessed 14 October 2014].

[7] V. A. Kulyukin and C. Gharpure, "Ergonomics-for-One in a Shopping Cart for the Blind," in Proceedings of the 2006 ACM Conference on Human-Robot Interaction, Salt Lake City, USA, 2006.

[8] V. Kulyukin, C. Gharpure and C. Pentico, "Robots as interfaces to haptic and locomotor spaces," in Proc. ACM/IEEE Int. Conf. Hum.-Robot Interact., 2007.

[9] R. Ivanov, "Indoor navigation system for visually impaired," in Proc. Int. Conf. on Computer Systems and Technologies, 2010.

[10] D. López-de-Ipiña, T. Lorido and U. López, "BlindShopping: Enabling accessible shopping for visually impaired people through mobile technologies," in ICOST 2011: 9th International Conference on Smart Homes and Health Telematics, Montréal, Canada, 2011.

[11] P. Upadhyaya, "Need Of NFC technology for helping blind and short come people," International Journal of Engineering Research & Technology (IJERT), vol. 2, 2013.

[12] S. Krishna, V. Balasubramanian, N. C. Krishnan and T. Hedgpeth, "The iCARE ambient interactive shopping environment," in 23rd Annual International Technology and Persons with Disabilities Conference (CSUN), Los Angeles, CA, 2008.

[13] S. Karpischek, F. Michahelles, F. Resatsch and E. Fleisch, "Mobile Sales Assistant - An NFC-Based Product Information System for Retailers," in First International Workshop on Near Field Communication, 2009.

[14] M. Harjumaa, M. Isomursu, S. Muuraiskangas and A. Konttila, "HearMe: A touch-to-speech UI for medicine identification," in 5th International Conference on Pervasive Computing Technologies for Healthcare PervasiveHealth and Workshops, 2011.

[15] H. McLean, "French retailer tests NFC as aid for visually impaired shoppers," 6 September 2011. [Online]. Available: http://www.nfcworld.com/2011/09/06/39711. [Accessed 29 August 2014].